Hadoop Deployment

Hadoop deployment steps (for now, only a standalone deployment on Windows)

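A note up front: for most errors, check the JDK and Hadoop versions first, and only then the winutils version and the configuration. I assume you can read the console output and error messages.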

Download the JDK and Hadoop

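This article uses JDK 11 and Hadoop 3.3.5 throughout. I originally used JDK 17, but it produced many runtime errors, and I eventually got stuck on the following one and could not resolve it: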

```text
ERROR nodemanager.NodeManager: Error starting NodeManager
java.lang.ExceptionInInitializerError
	at com.google.inject.internal.cglib.reflect.$FastClassEmitter.<init>(FastClassEmitter.java:67)
	at com.google.inject.internal.cglib.reflect.$FastClass$Generator.generateClass(FastClass.java:72)
	at com.google.inject.internal.cglib.core.$DefaultGeneratorStrategy.generate(DefaultGeneratorStrategy.java:25)
	at com.google.inject.internal.cglib.core.$AbstractClassGenerator.create(AbstractClassGenerator.java:216)
	at com.google.inject.internal.cglib.reflect.$FastClass$Generator.create(FastClass.java:64)
	at com.google.inject.internal.BytecodeGen.newFastClass(BytecodeGen.java:204)
	at com.google.inject.internal.ProviderMethod$FastClassProviderMethod.<init>(ProviderMethod.java:256)
	at com.google.inject.internal.ProviderMethod.create(ProviderMethod.java:71)
	at com.google.inject.internal.ProviderMethodsModule.createProviderMethod(ProviderMethodsModule.java:275)
	at com.google.inject.internal.ProviderMethodsModule.getProviderMethods(ProviderMethodsModule.java:144)
	at com.google.inject.internal.ProviderMethodsModule.configure(ProviderMethodsModule.java:123)
	at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)
	at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:349)
	at com.google.inject.AbstractModule.install(AbstractModule.java:122)
	at com.google.inject.servlet.ServletModule.configure(ServletModule.java:52)
	at com.google.inject.AbstractModule.configure(AbstractModule.java:62)
	at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)
	at com.google.inject.spi.Elements.getElements(Elements.java:110)
	at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:138)
	at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:104)
	at com.google.inject.Guice.createInjector(Guice.java:96)
	at com.google.inject.Guice.createInjector(Guice.java:73)
	at com.google.inject.Guice.createInjector(Guice.java:62)
	at org.apache.hadoop.yarn.webapp.WebApps$Builder.build(WebApps.java:417)
	at org.apache.hadoop.yarn.webapp.WebApps$Builder.start(WebApps.java:465)
	at org.apache.hadoop.yarn.webapp.WebApps$Builder.start(WebApps.java:461)
	at org.apache.hadoop.yarn.server.nodemanager.webapp.WebServer.serviceStart(WebServer.java:125)
	at org.apache.hadoop.service.AbstractService.start(AbstractService.java:194)
	at org.apache.hadoop.service.CompositeService.serviceStart(CompositeService.java:122)
	at org.apache.hadoop.service.AbstractService.start(AbstractService.java:194)
	at org.apache.hadoop.yarn.server.nodemanager.NodeManager.initAndStartNodeManager(NodeManager.java:963)
	at org.apache.hadoop.yarn.server.nodemanager.NodeManager.main(NodeManager.java:1042)
Caused by: java.lang.reflect.InaccessibleObjectException: Unable to make protected final java.lang.Class java.lang.ClassLoader.defineClass(java.lang.String,byte[],int,int,java.security.ProtectionDomain) throws java.lang.ClassFormatError accessible: module java.base does not "opens java.lang" to unnamed module @6025e1b6
	at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:354)
	at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:297)
	at java.base/java.lang.reflect.Method.checkCanSetAccessible(Method.java:199)
	at java.base/java.lang.reflect.Method.setAccessible(Method.java:193)
	at com.google.inject.internal.cglib.core.$ReflectUtils$2.run(ReflectUtils.java:56)
	at java.base/java.security.AccessController.doPrivileged(AccessController.java:318)
	at com.google.inject.internal.cglib.core.$ReflectUtils.<clinit>(ReflectUtils.java:46)
	... 32 more
2023-10-12 15:56:20,882 INFO ipc.Server: Stopping server on 8122
2023-10-12 15:56:20,882 INFO ipc.Server: Stopping IPC Server listener on 0
2023-10-12 15:56:20,882 INFO ipc.Server: Stopping IPC Server Responder
2023-10-12 15:56:20,883 WARN monitor.ContainersMonitorImpl: org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl is interrupted. Exiting.
2023-10-12 15:56:20,889 INFO ipc.Server: Stopping server on 8040
2023-10-12 15:56:20,890 INFO ipc.Server: Stopping IPC Server listener on 8040
2023-10-12 15:56:20,890 INFO ipc.Server: Stopping IPC Server Responder
2023-10-12 15:56:20,890 WARN nodemanager.NodeResourceMonitorImpl: org.apache.hadoop.yarn.server.nodemanager.NodeResourceMonitorImpl is interrupted. Exiting.
2023-10-12 15:56:20,890 INFO localizer.ResourceLocalizationService: Public cache exiting
2023-10-12 15:56:20,891 INFO impl.MetricsSystemImpl: Stopping NodeManager metrics system...
2023-10-12 15:56:20,892 INFO impl.MetricsSystemImpl: NodeManager metrics system stopped.
2023-10-12 15:56:20,892 INFO impl.MetricsSystemImpl: NodeManager metrics system shutdown complete.
2023-10-12 15:56:20,893 INFO nodemanager.NodeManager: SHUTDOWN_MSG:
```

Roughly speaking, JDK 17 restricts the reflection that the Guice library relies on. I tried adding the JVM option `--add-opens java.base/java.lang=ALL-UNNAMED`, but it did not help. JDK 17 has also dropped support for `--illegal-access=permit`. So far I have not found a way to run Hadoop on JDK 17.
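The JDK version is the first thing to rule out, so a small check can help. The sketch below is a Python helper that reads the major version from `java -version` output. It assumes the usual `version "..."` format: JDK 8 reports `1.8.0_xxx`, and JDK 9 and later report values such as `11.0.20`. The names `java_major_version`, `check_local_java` and `SUPPORTED_MAJORS` are hypothetical. The allowed set {8, 11} reflects only what this article found to work.

```python
import re
import subprocess

# JDK major versions treated as fine in this article (8 or 11); 17 failed.
SUPPORTED_MAJORS = {8, 11}


def java_major_version(version_output: str) -> int:
    """Extract the major version from `java -version` output."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        raise ValueError("no version string found")
    first, _, rest = match.group(1).partition(".")
    # JDK 8 and older use the legacy "1.x" scheme, e.g. "1.8.0_381".
    if first == "1" and rest:
        first = rest.partition(".")[0]
    digits = re.match(r"\d+", first)
    if digits is None:
        raise ValueError(f"unexpected version string {match.group(1)!r}")
    return int(digits.group())


def check_local_java() -> int:
    """Run `java -version` and warn if the major version is not 8 or 11."""
    # `java -version` prints to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    major = java_major_version(result.stderr)
    if major not in SUPPORTED_MAJORS:
        print(f"JDK {major} detected; this guide uses JDK 11 (or 8)")
    return major
```

Calling `check_local_java()` before starting Hadoop would have flagged the JDK 17 problem above immediately.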

Download winutils

Download the winutils build that matches your Hadoop version.

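Add environment variables

Add the HADOOP_HOME and HADOOP_USER_NAME variables, and make sure JAVA_HOME is set:

- HADOOP_HOME = your Hadoop directory, for example D:/envs/hadoop-3.3.5

- HADOOP_USER_NAME = root

- JAVA_HOME = your JDK path. If you already have a JDK but it is a newer version such as 17, you do not need to change this variable; see the hadoop-env.cmd step later on.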

Add the following entries to Path:

- %JAVA_HOME%\bin

- %JAVA_HOME%\jre\bin (only relevant for JDK 8; JDK 11 no longer has a separate jre directory)

- %HADOOP_HOME%\bin

- %HADOOP_HOME%\sbin

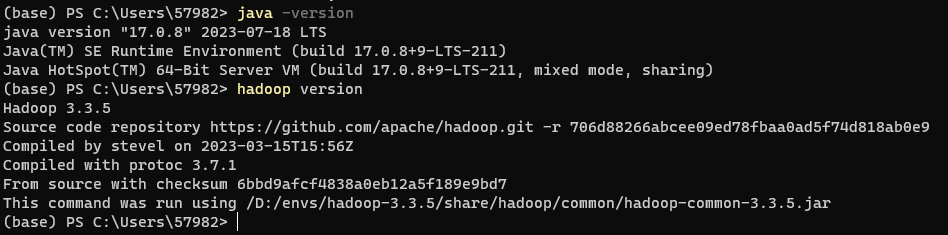
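A short script can check the variables and Path entries listed above. This is a sketch in Python that makes a few assumptions. The helper `check_env` is hypothetical. Path entries are separated by `;`, as on Windows. The `%JAVA_HOME%\jre\bin` entry is left out because JDK 11 does not have that directory.

```python
import os

REQUIRED_VARS = ("JAVA_HOME", "HADOOP_HOME", "HADOOP_USER_NAME")
REQUIRED_PATH_ENTRIES = (r"%JAVA_HOME%\bin", r"%HADOOP_HOME%\bin", r"%HADOOP_HOME%\sbin")


def _norm(path: str) -> str:
    # Windows paths are case-insensitive and accept both slash styles.
    return path.strip().replace("/", "\\").rstrip("\\").lower()


def check_env(env: dict) -> list:
    """Return a list of problems with the variables above (empty if none)."""
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]
    path_entries = {_norm(p) for p in env.get("PATH", "").split(";") if p.strip()}
    for entry in REQUIRED_PATH_ENTRIES:
        # Expand %VAR% references so both literal and expanded forms match.
        expanded = entry
        for name in ("JAVA_HOME", "HADOOP_HOME"):
            if env.get(name):
                expanded = expanded.replace(f"%{name}%", env[name])
        if _norm(expanded) not in path_entries and _norm(entry) not in path_entries:
            problems.append(f"Path is missing {entry}")
    return problems


if __name__ == "__main__":
    # On Windows, os.environ keys are upper-case, so "PATH" is found here.
    for problem in check_env(dict(os.environ)):
        print(problem)
```

Open a new command prompt after editing the variables before running this, because already-open windows keep the old environment.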

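Verify the installation

Run the following commands in a command prompt:

```bat
java -version
hadoop version
```

The output should report Java 11 and Hadoop 3.3.5.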
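You can also compare the `hadoop version` output against the expected 3.3.5 from a script. The sketch below assumes the output contains a line of the form `Hadoop 3.3.5`. `hadoop_version` and `local_hadoop_version` are hypothetical names.

```python
import re
import subprocess


def hadoop_version(output: str) -> str:
    """Return the X.Y.Z version from the 'Hadoop X.Y.Z' line of `hadoop version`."""
    for line in output.splitlines():
        match = re.match(r"\s*Hadoop (\d+(?:\.\d+)+)\s*$", line)
        if match:
            return match.group(1)
    raise ValueError("no 'Hadoop <version>' line found")


def local_hadoop_version() -> str:
    # On Windows `hadoop` is a .cmd script, so run it through the shell.
    result = subprocess.run("hadoop version", shell=True, capture_output=True, text=True)
    return hadoop_version(result.stdout)
```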

Edit some Hadoop configuration files

The files below are in the etc/hadoop folder.

core-site.xml

```xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <!-- Use localhost to refer to this machine -->
        <value>hdfs://localhost:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <!-- Make sure this directory exists and has appropriate permissions -->
        <value>/D:/envs/hadoop-3.3.5/tmp</value>
    </property>
    <property>
        <name>hadoop.http.staticuser.user</name>
        <!-- Using root may not be best practice unless you have a specific reason; consider a regular user account -->
        <value>root</value>
    </property>
</configuration>
```
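A small parser can double-check what a *-site.xml file actually contains. This sketch uses Python's standard `xml.etree.ElementTree` and assumes the plain `<configuration>`/`<property>`/`<name>`/`<value>` layout shown above. `read_site_xml` and `read_site_file` are hypothetical helpers. The parser also flags property names with stray whitespace so they can be cleaned up.

```python
import xml.etree.ElementTree as ET


def read_site_xml(data) -> dict:
    """Parse a Hadoop *-site.xml document (str or bytes) into a {name: value} dict."""
    root = ET.fromstring(data)  # XML comments are discarded by the parser
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name", default="")
        value = prop.findtext("value", default="")
        if name != name.strip():
            # Flag stray whitespace such as "dfs.replication " for cleanup.
            print(f"warning: property name {name!r} has surrounding whitespace")
        props[name.strip()] = value.strip()
    return props


def read_site_file(path: str) -> dict:
    # Read bytes so the parser honours the file's own <?xml encoding=...?> header.
    with open(path, "rb") as f:
        return read_site_xml(f.read())
```

For example, `read_site_file(r"D:\envs\hadoop-3.3.5\etc\hadoop\core-site.xml")["fs.defaultFS"]` should give `hdfs://localhost:9000` with the configuration above.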

mapred-site.xml

```xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```

hdfs-site.xml

Create a data directory under the Hadoop directory.

Then create a namenode directory inside that data directory.
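A script can also create these directories. Below is a minimal Python sketch that assumes the directory layout used in this article. Besides namenode, the list includes the datanode and snn directories referenced in hdfs-site.xml and the tmp directory from core-site.xml. `create_data_dirs`, `config_path_to_windows` and `DATA_SUBDIRS` are hypothetical names. The second helper shows how a config value such as `/D:/envs/...` maps to an ordinary Windows path.

```python
from pathlib import Path, PureWindowsPath

# Directories used by hadoop.tmp.dir (core-site.xml) and hdfs-site.xml,
# relative to the Hadoop directory.
DATA_SUBDIRS = ("tmp", "data/namenode", "data/datanode", "data/snn")


def config_path_to_windows(value: str) -> PureWindowsPath:
    """Turn a config value such as '/D:/envs/x' into 'D:\\envs\\x'."""
    # The config writes a '/' before the drive letter; strip it for a plain path.
    if len(value) >= 3 and value[0] == "/" and value[2] == ":":
        value = value[1:]
    return PureWindowsPath(value)


def create_data_dirs(hadoop_home: Path) -> list:
    """Create the directories above (no error if they already exist)."""
    created = []
    for sub in DATA_SUBDIRS:
        target = hadoop_home / sub
        target.mkdir(parents=True, exist_ok=True)
        created.append(target)
    return created
```

On the machine in this article, that would be `create_data_dirs(Path(r"D:\envs\hadoop-3.3.5"))`.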

```xml
<configuration>
    <!-- Set to 1 because this is a single-node Hadoop setup -->
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>/D:/envs/hadoop-3.3.5/data/namenode</value>
    </property>
    <property>
        <name>dfs.namenode.checkpoint.dir</name>
        <value>/D:/envs/hadoop-3.3.5/data/snn</value>
    </property>
    <property>
        <name>dfs.namenode.checkpoint.edits.dir</name>
        <value>/D:/envs/hadoop-3.3.5/data/snn</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>/D:/envs/hadoop-3.3.5/data/datanode</value>
    </property>
</configuration>
```

yarn-site.xml

```xml
<configuration>
    <!-- Site specific YARN configuration properties -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
</configuration>
```

hadoop-env.cmd

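Find the line `set JAVA_HOME=%JAVA_HOME%`. If your JDK is already Java 8 or 11, no change is needed. If it is 17 or another newer version, point it at the JDK 11 you downloaded earlier, for example `set JAVA_HOME=C:\Java\jdk-11`. Write a plain path, without surrounding % signs. Avoid JDK paths that contain spaces, such as those under C:\Program Files, because the Hadoop .cmd scripts do not handle them.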
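You can also script this edit. The Python sketch below uses a hypothetical `set_java_home` helper to replace the `set JAVA_HOME=` line. It assumes the line starts with `set JAVA_HOME=` (optionally indented), as in the stock file, and it rejects paths containing spaces.

```python
import re


def set_java_home(env_cmd_text: str, jdk_path: str) -> str:
    """Return hadoop-env.cmd text with its `set JAVA_HOME=` line pointing at jdk_path."""
    if " " in jdk_path:
        # The Hadoop .cmd scripts do not cope with spaces in JAVA_HOME.
        raise ValueError("use a JDK path without spaces, e.g. C:\\Java\\jdk-11")
    new_text, count = re.subn(
        r"(?im)^([ \t]*)set JAVA_HOME=[^\r\n]*",
        # A function replacement keeps backslashes in the path literal.
        lambda m: f"{m.group(1)}set JAVA_HOME={jdk_path}",
        env_cmd_text,
    )
    if count == 0:
        raise ValueError("no 'set JAVA_HOME=' line found")
    return new_text


def update_file(path: str, jdk_path: str) -> None:
    # newline="" preserves the file's existing \r\n line endings.
    with open(path, encoding="utf-8", newline="") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.write(set_java_home(text, jdk_path))
```

The replacement is passed as a function rather than a string so that `re` does not treat backslashes in the Windows path (such as `\J`) as escape sequences.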

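Replace the bin directory contents with winutils

Copy the winutils files into %HADOOP_HOME%\bin, overwriting the existing files. As long as the versions match, that is all you need to do.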
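A quick check that looks for the winutils files in the bin directory can confirm the copy worked. This assumes your winutils download contains `winutils.exe` and `hadoop.dll`; adjust the list to match what you actually downloaded. The helper names are hypothetical.

```python
import os
from pathlib import Path

# Files a winutils download commonly provides (assumption; check your download).
EXPECTED_FILES = ("winutils.exe", "hadoop.dll")


def missing_winutils_files(bin_dir: Path) -> list:
    """Return the expected winutils files that are not present in bin_dir."""
    return [name for name in EXPECTED_FILES if not (bin_dir / name).is_file()]


def check_hadoop_bin() -> list:
    # Uses the HADOOP_HOME environment variable configured earlier.
    return missing_winutils_files(Path(os.environ["HADOOP_HOME"]) / "bin")
```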

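Format the storage directory

Run the following in a command prompt:

```bat
hdfs namenode -format
```

At this point, the configuration should be complete.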

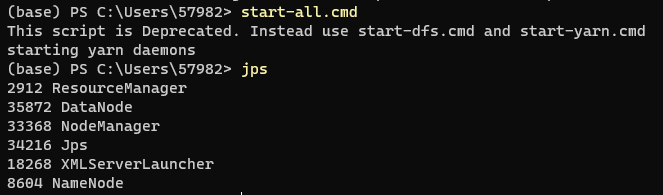

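Hadoop, launch!

Run start-all.cmd in a command prompt. jps should then list the following services (typically NameNode, DataNode, ResourceManager and NodeManager):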

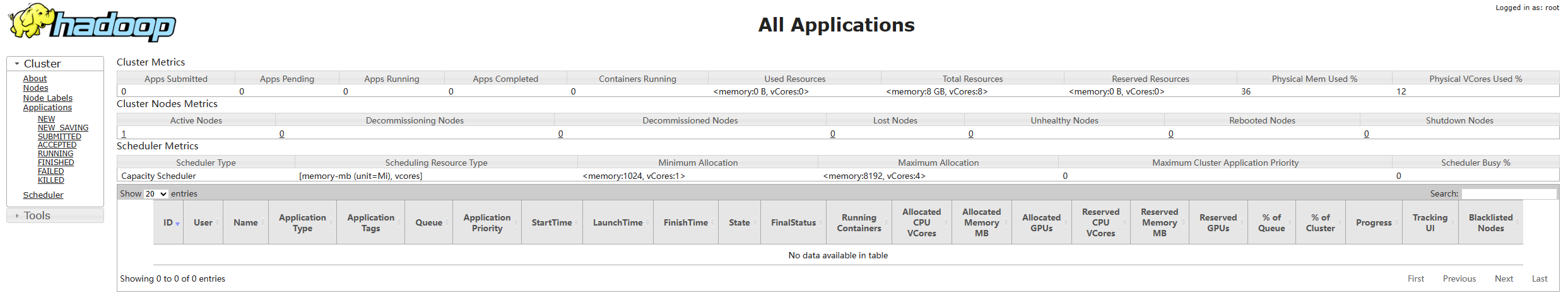
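You can automate the jps check. The sketch below assumes each jps line has the form `<pid> <name>`. It also assumes the expected daemons for this single-node setup are NameNode, DataNode, ResourceManager and NodeManager. The helper names are hypothetical.

```python
import subprocess

# Daemons this single-node setup is expected to run (assumption, see text).
EXPECTED_DAEMONS = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def running_daemons(jps_output: str) -> set:
    """Collect the main-class names from `jps` output ("<pid> <name>" per line)."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split(maxsplit=1)
        if len(parts) == 2:
            names.add(parts[1].strip())
    return names


def missing_daemons(jps_output: str) -> set:
    return EXPECTED_DAEMONS - running_daemons(jps_output)


def check_local_daemons() -> set:
    """Run jps (from the JDK's bin directory) and return the missing daemons."""
    result = subprocess.run(["jps"], capture_output=True, text=True)
    return missing_daemons(result.stdout)
```

An empty set from `check_local_daemons()` means all four daemons are up. A missing NodeManager, for instance, points back to problems like the JDK 17 error at the top of this article.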

Service management

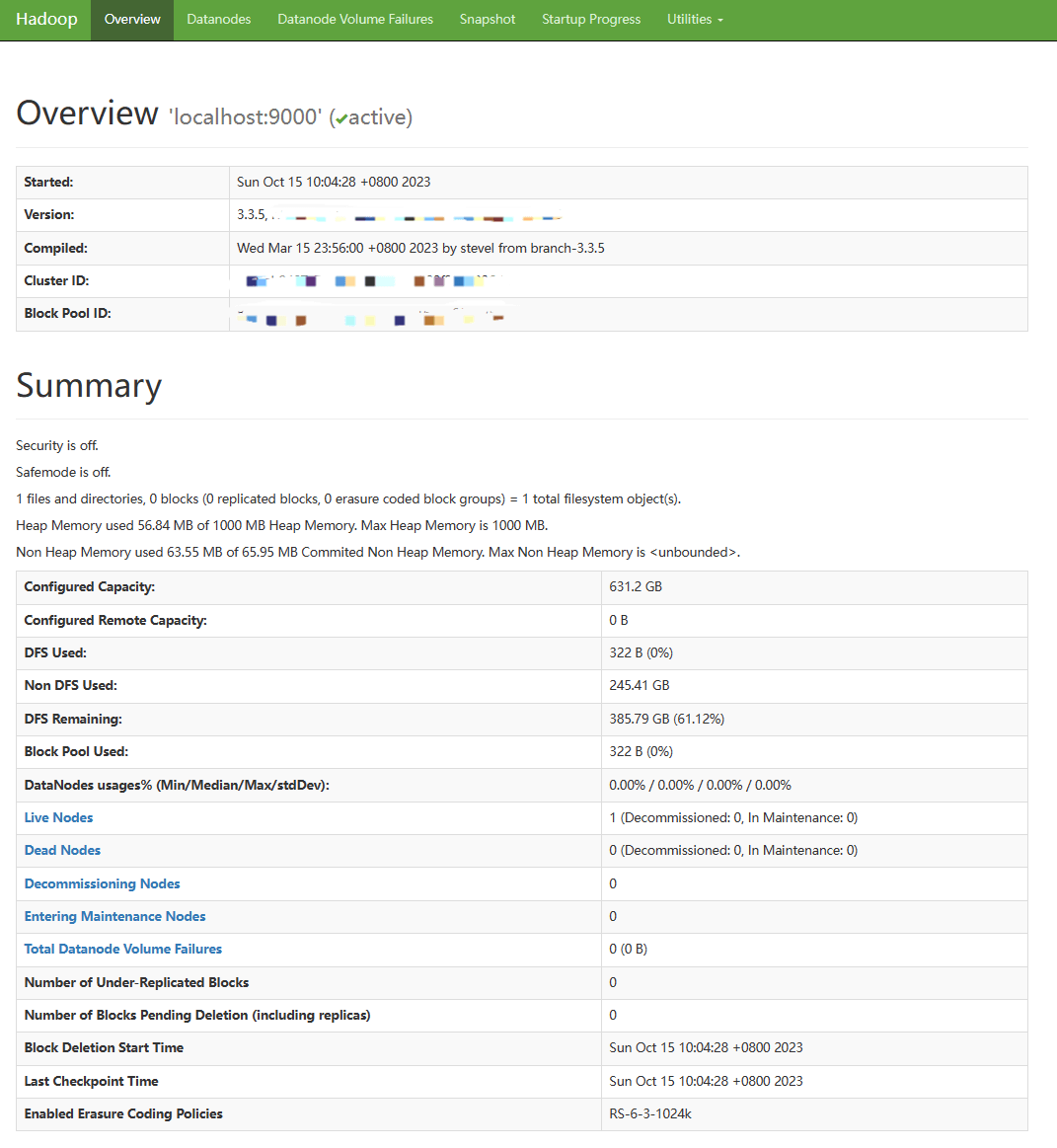

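View the cluster node status (YARN ResourceManager web UI, by default at http://localhost:8088)

View the file management page (HDFS NameNode web UI, by default at http://localhost:9870, under Utilities > Browse the file system)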
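You can probe both pages from a script. This Python sketch assumes the default Hadoop 3.x web UI ports: 8088 for YARN and 9870 for the NameNode. `is_reachable`, `check_web_uis` and `WEB_UIS` are hypothetical names. The system proxy is bypassed because the pages are served locally.

```python
import urllib.error
import urllib.request

# Default Hadoop 3.x web UI addresses; adjust if you changed the ports.
WEB_UIS = {
    "cluster nodes (YARN ResourceManager)": "http://localhost:8088/cluster/nodes",
    "file browser (HDFS NameNode)": "http://localhost:9870/explorer.html",
}

# Bypass any system proxy: these pages are served from this machine.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at url."""
    try:
        with _OPENER.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied, just with an error status.
        return True
    except OSError:
        # Connection refused, timeout, etc. (URLError is a subclass of OSError).
        return False


def check_web_uis() -> dict:
    return {name: is_reachable(url) for name, url in WEB_UIS.items()}
```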